
The gap between aspirational AI principles and operational reality is where risks fester – ethical breaches, regulatory fines, brand damage, and failed deployments.

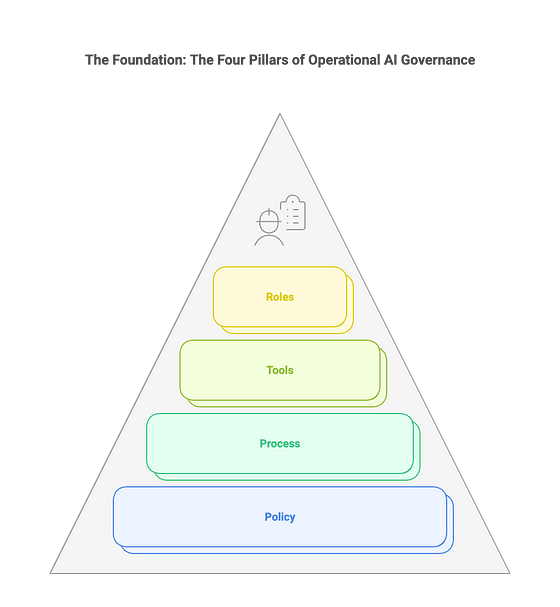

An effective MVG framework isn’t a single document; it’s an integrated system resting on four critical pillars. Neglect any one, and the structure collapses.

- Policy Pillar (the “What” and “Why”): Setting the Rules of the Road

  - Purpose: Defines the organization’s binding commitments, standards, and expectations for responsible AI development, deployment, and use.

  - Core Components:

    - Risk Classification Schema: A clear system for categorizing AI applications by potential impact (e.g., High-Risk: hiring, credit scoring, critical infrastructure; Medium-Risk: internal process automation; Low-Risk: basic chatbots). The assigned class dictates the level of governance scrutiny; align the classes with an established framework such as the NIST AI RMF or the EU AI Act’s risk categories.

    - Core Mandatory Requirements: Specific, non-negotiable obligations that apply to all AI projects. Examples:

      - Human Oversight: Define the acceptable level of human involvement (human-in-the-loop, human-on-the-loop, or human review) for each risk class.

      - Fairness & Bias Mitigation: Requirements for impact assessments, testing metrics (e.g., demographic parity difference, equal opportunity difference), and mitigation steps.

      - Transparency & Explainability: Minimum standards for model documentation (e.g., datasheets, model cards), user notifications, and the explainability techniques required at each risk level.

      - Robustness, Safety & Security: Requirements for adversarial testing, accuracy thresholds, drift monitoring, and secure
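As a minimal sketch, the risk classification schema and the per-class human-oversight requirement above could be encoded as a lookup that governance tooling consults before a project proceeds. The three tiers follow the article's examples; the use-case keys, the oversight mode assigned to each tier, and the functions `classify` and `required_oversight` are hypothetical and shown for illustration only.

```python
from enum import Enum


class RiskTier(Enum):
    """Illustrative tiers mirroring the examples in the schema above."""
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"


# Hypothetical mapping from use-case category to risk tier; a real schema
# would be aligned with the NIST AI RMF or EU AI Act categories.
USE_CASE_TIERS = {
    "hiring": RiskTier.HIGH,
    "credit_scoring": RiskTier.HIGH,
    "critical_infrastructure": RiskTier.HIGH,
    "internal_process_automation": RiskTier.MEDIUM,
    "basic_chatbot": RiskTier.LOW,
}

# Hypothetical oversight mode required for each tier.
OVERSIGHT = {
    RiskTier.HIGH: "human-in-the-loop",
    RiskTier.MEDIUM: "human-on-the-loop",
    RiskTier.LOW: "periodic review",
}


def classify(use_case: str) -> RiskTier:
    """Return the tier for a known use case; unknown cases default to HIGH
    so that unclassified systems receive the most scrutiny."""
    return USE_CASE_TIERS.get(use_case, RiskTier.HIGH)


def required_oversight(use_case: str) -> str:
    """Look up the oversight mode implied by the use case's tier."""
    return OVERSIGHT[classify(use_case)]


if __name__ == "__main__":
    for case in ["credit_scoring", "basic_chatbot", "new_unreviewed_tool"]:
        print(case, classify(case).value, required_oversight(case))
```

Defaulting unknown use cases to the high-risk tier is one possible design choice: it forces an explicit classification decision before a new system can move to lighter oversight.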
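The two fairness metrics named in the requirements can be computed in a few lines. This sketch assumes binary labels and predictions (0/1) and a single sensitive attribute, and reports each metric as the largest gap between any two groups: demographic parity difference compares selection rates P(ŷ = 1 | group), and equal opportunity difference compares true positive rates P(ŷ = 1 | y = 1, group). Libraries such as Fairlearn and AIF360 provide maintained implementations of metrics like these, and their sign and aggregation conventions differ, so a policy should state which definition it uses.

```python
from collections import defaultdict


def rate_by_group(values, groups):
    """Mean of 0/1 values within each group."""
    totals, counts = defaultdict(int), defaultdict(int)
    for value, group in zip(values, groups):
        totals[group] += value
        counts[group] += 1
    return {g: totals[g] / counts[g] for g in counts}


def demographic_parity_difference(y_pred, groups):
    """Largest gap in selection rate P(y_pred = 1 | group) between groups."""
    rates = rate_by_group(y_pred, groups)
    return max(rates.values()) - min(rates.values())


def equal_opportunity_difference(y_true, y_pred, groups):
    """Largest gap in true positive rate P(y_pred = 1 | y_true = 1, group).
    Only rows with y_true == 1 are used, so a group with no positives is skipped."""
    rows = [(p, g) for t, p, g in zip(y_true, y_pred, groups) if t == 1]
    rates = rate_by_group([p for p, _ in rows], [g for _, g in rows])
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    y_true = [1, 1, 0, 0, 1, 1, 0, 0]
    y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    print(demographic_parity_difference(y_pred, groups))  # 0.5
    print(equal_opportunity_difference(y_true, y_pred, groups))  # 0.5
```

In the toy data, group "a" is selected at a rate of 0.75 versus 0.25 for group "b", and its true positive rate is 1.0 versus 0.5. What gap counts as acceptable is a policy decision, which could reasonably vary by risk class.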
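One way to make the model-documentation standard enforceable is an automated completeness check that runs before deployment. The sketch below treats a model card as a plain dictionary; the tier names, the required fields per tier, and the `missing_fields` helper are hypothetical placeholders that an actual policy would replace with its own list.

```python
# Hypothetical minimum model-card fields per risk tier; an actual policy
# would define its own list.
REQUIRED_FIELDS = {
    "low": {"intended_use", "owner"},
    "medium": {"intended_use", "owner", "training_data", "evaluation_metrics"},
    "high": {
        "intended_use", "owner", "training_data", "evaluation_metrics",
        "fairness_analysis", "limitations", "explainability_method",
    },
}


def missing_fields(model_card: dict, tier: str) -> set:
    """Return required fields that are absent or left empty in the card."""
    return {name for name in REQUIRED_FIELDS[tier] if not model_card.get(name)}


if __name__ == "__main__":
    # Example card with only the basics filled in.
    card = {"intended_use": "Rank support tickets by urgency", "owner": "ml-platform"}
    print(sorted(missing_fields(card, "low")))  # []
    print(sorted(missing_fields(card, "high")))
    # ['evaluation_metrics', 'explainability_method', 'fairness_analysis',
    #  'limitations', 'training_data']
```

Tying the required fields to the risk tier keeps documentation effort proportional: a low-risk tool passes with a short card, while a high-risk system is blocked until its fairness and explainability sections exist.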
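For the drift-monitoring requirement, one widely used statistic is the Population Stability Index (PSI), which compares the binned distribution of a feature or model score in production against a baseline: PSI = Σ (actualᵢ − expectedᵢ) · ln(actualᵢ / expectedᵢ). PSI is only one of several drift measures a policy might choose. The bin edges, the epsilon used for empty bins, and the 0.1 / 0.25 cut-offs below are assumptions; the cut-offs are commonly cited rules of thumb, not a standard.

```python
import math
from bisect import bisect_right


def bin_proportions(values, edges, eps=1e-6):
    """Share of values in each bin; `edges` are sorted interior cut points,
    giving len(edges) + 1 bins. `eps` keeps empty bins from yielding log(0)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(baseline, current, edges):
    """PSI between a baseline sample and a current sample over shared bins."""
    expected = bin_proportions(baseline, edges)
    actual = bin_proportions(current, edges)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def drift_status(psi, warn=0.1, alert=0.25):
    """Map a PSI value to a status using assumed rule-of-thumb cut-offs."""
    if psi < warn:
        return "stable"
    if psi < alert:
        return "investigate"
    return "drift"


if __name__ == "__main__":
    edges = [10, 20]  # hypothetical cut points for a model score
    baseline = [5] * 50 + [15] * 50
    current = [15] * 50 + [25] * 50  # scores have shifted upward
    psi = population_stability_index(baseline, current, edges)
    print(round(psi, 2), drift_status(psi))
```

In practice the bin edges are usually fixed from the baseline (for example, its deciles) so that every later comparison uses the same bins.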

Read More: From Principles to Playbook: Build an AI-Governance Framework in 30 Days


Follow Nate Patel for More on AI Strategy and Ethical Innovation:

🔹 LinkedIn: linkedin.com/in/npofc

🔹 X (formerly Twitter): x.com/npatelofc

🔹 Instagram: instagram.com/natepatel.aicpto

Stay connected to discover the latest in AI insights, enterprise strategy, and future-focused keynotes.
